Next: Saving the newly calculated

Up: Parallelizing matrix-vector multiplication

Previous: Block-checkerboard partitioning

Contents

A Sparse Matrix is a matrix which has very few non-zero entries. The general

definition of "sparseness" is if one has an  non-singular matrix, then the

matrix is sparse if;

non-singular matrix, then the

matrix is sparse if;

the number of non-zeros

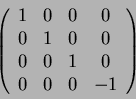

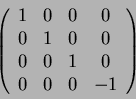

Every operation in Quantum Computing can be represented by a matrix, with the

requirement that the matrix be unitary. A general trait of a unitary matrix

is that it has a large number of zeros, although this is not always the case.

The following matrix is an example of a sparse unitary matrix. It is called

the controlled-Z gate.

It is highly inefficient in terms of both memory storage and network bandwidth

to distribute a highly sparse matrix in normal rectangular format over the

network. For example, if one were to distribute an 8x8 matrix, such as the

Toffoli gate, one would be sending 64 numbers, only 8 of which would be

non-zeros!

An efficient way of storing sparse matrices is called the

Compressed Sparse Row format. Instead of a single large rectangular

array, three arrays are used to store the matrix. The first array contains

the non-zeros of the array, starting from the first row, and reading from

left to right across each row. The second array contains the corresponding

column subscripts of the entries in the first array. The third array contains

the subscript of the first number in each row. Instead of storing  numbers,

as in the standard rectangular matrix format, the CSR format stores just q

non-zero numbers, and n+q integers, which are the row and column subscripts.

numbers,

as in the standard rectangular matrix format, the CSR format stores just q

non-zero numbers, and n+q integers, which are the row and column subscripts.

In the QCL code, the matrix is transformed into CSR format. The array of row

subscripts is broadcast to all the nodes, as is the qubit vector. The root node

then sends out a portion of the non-zero values, and column indices to each

node. This saves space on just broadcasting the whole arrays over the network.

Each node the computes it's own result, and sends the result back to the head

node.

Next: Saving the newly calculated

Up: Parallelizing matrix-vector multiplication

Previous: Block-checkerboard partitioning

Contents

Colm O hEigeartaigh

2003-05-30